Yale-NUS alumnus contributing to AI research

Ayrton San Joaquin's work explores the interaction between AI and human users, and how to make that interaction safer

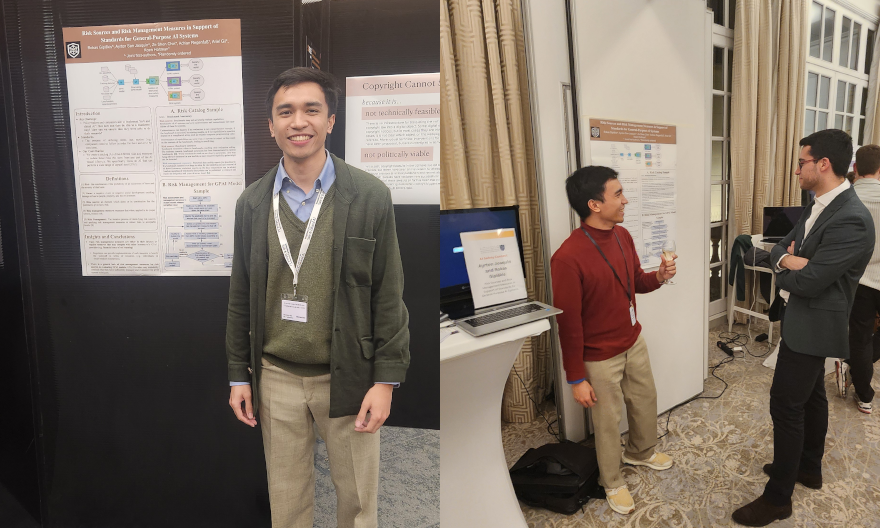

Ayrton San Joaquin (Class of 2022) presenting his poster on his paper at IASEAI’25 and AI Safety Connect. Photos provided by Ayrton.

Given the diversity of their interests, alumni of Yale-NUS College pursue many different paths after graduation. For some, this means starting a new job at a non-profit organisation, a venture capital firm, or a well-established company. For others, the academic rigour of college does not end at graduation but continues in the form of transformative scholarly professions. Ayrton San Joaquin (Class of 2022) is one of many alumni who have embarked on, and thrived in, a career in research.

At Yale-NUS, Ayrton majored in Mathematical, Computational and Statistical Sciences (MCS) and is now a researcher for AI Standards Labs. In his work, he looks at “developing risk management standards for AI in the European Union (EU) via the General-Purpose AI Code of Practice”, an area that is a cross between technical research and public policy. He shared that understanding “how AI and its development affects human users” is more important to him than “glamorous fields that break records for AI capabilities like AlphaFold or AlphaGo” because “the safety of [our] interaction with AI is an underserved area of research in terms of talent and funding”, especially in a world where AI is ubiquitous.

In February, Ayrton was invited to present a poster on his latest paper, “Risk Sources and Risk Management Measures in Support of Standards for General-Purpose AI Systems” (published in 2024), at AI Safety Connect and at the inaugural conference of the International Association for Safe and Ethical AI (IASEAI’25), an official side event of the Paris AI Action Summit in France. He presented alongside notable representatives from academia, civil society, industry, media and government, including Nobel laureates and Turing Award winners. Among them were Maria Ressa, a renowned Filipino-American journalist and CEO of Rappler; Kate Crawford, a Research Professor of AI at USC Annenberg in Los Angeles; and Stuart Russell, whose introductory textbook on AI helped Ayrton understand more about the topic.

This year, the conference concluded with a call for ten critical action items aimed at developing AI systems that are safe and ethical. Ayrton was delighted that many people told him they found his paper useful for their policy and research work, and he was grateful for the opportunity to present alongside established academics. Reflecting on the conference, Ayrton felt there could be more international coordination, but he is “happy that there is some progress on building genuine open-source infrastructure to develop safe AI and increasing access to important resources for AI development”.

From the conference, he learnt that, given the wide impact of AI, international coordination is needed to manage its use and development and to share a better understanding of the technology.

Ayrton with Maria Ressa, co-founder and CEO of Rappler.

Roundtable featuring Stuart Russell, whose introductory textbook on AI supported Ayrton’s work. Photos provided by Ayrton.

Ayrton also believes the humanities and social sciences have a central role in AI. As a Philosophy minor who also took interdisciplinary modules at Yale-NUS, such as Introduction to Environmental Studies and Oppression and Injustice, Ayrton feels his undergraduate education gave him the skill of connecting disparate ideas, which is essential for research. He shared that class discussions at Yale-NUS on concentrations of power, the Jevons paradox (where increased efficiency in producing a resource increases consumption of that resource rather than lowering it) and negative externalities have served him well in his work as a researcher. Through his work, Ayrton hopes to contribute to the field of AI, especially to the understanding of how the technology and its development affect human users.